[This is a sponsored article with Microsoft.]

Most of us who use AI chatbots are probably self-taught since they’re meant to be intuitive to use.

You type in your request, get the chatbot to produce something, tweak its suggestions along the way, and improve your prompts over time as you understand what the AI can and can’t do.

It’s straightforward, right?

So, when I was invited to an AI masterclass by Microsoft Malaysia (Microsoft) on using Microsoft Copilot (Copilot), I couldn’t help but wonder: What could they teach me that I didn’t already know?

What I already knew about Copilot

I was already familiar with how Copilot works across Microsoft 365 apps, from summarising key discussion points during a Teams meeting to creating sleek pitch decks in PowerPoint.

The idea behind Copilot is to increase users’ productivity by helping them focus on the work that really matters instead of the tasks that bog them down.

I’ve seen these features highlighted in new Windows laptops, some even with a dedicated Copilot key.

But with Microsoft embedding Copilot so deeply into its ecosystem, I had overlooked the Copilot chatbot itself. The workshop, however, showed me how Copilot could become the go-to productivity assistant for content creators.

What stood out to me from the hands-on session

We were set up with Copilot-enabled devices, ready to dive in and get hands-on. We explored various use cases of Copilot, from everyday tasks to those specific to content creators, such as:

Coming up with recipes from leftovers in the fridge

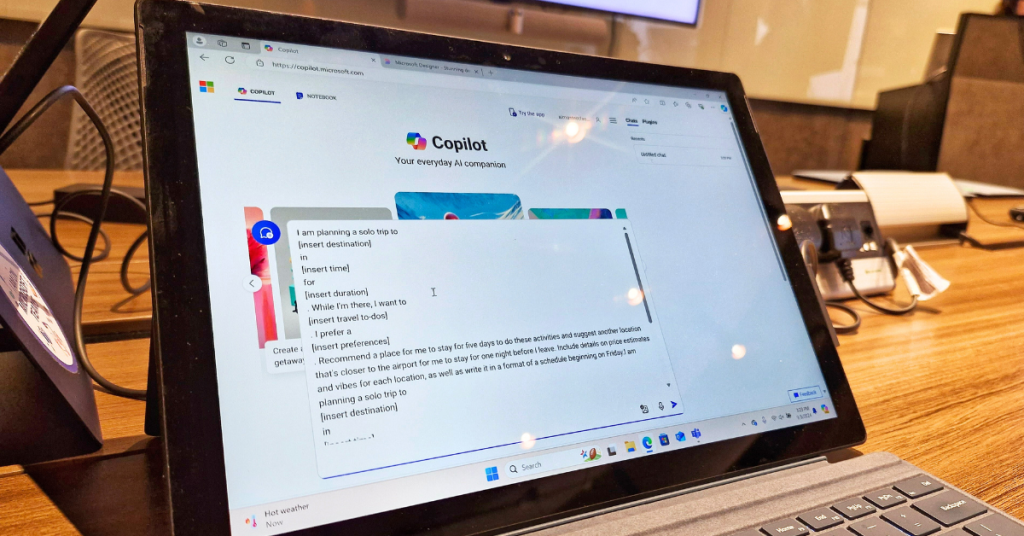

Planning events and trips

Generating posters with Microsoft Designer

Simplifying complex concepts into easy-to-understand explanations

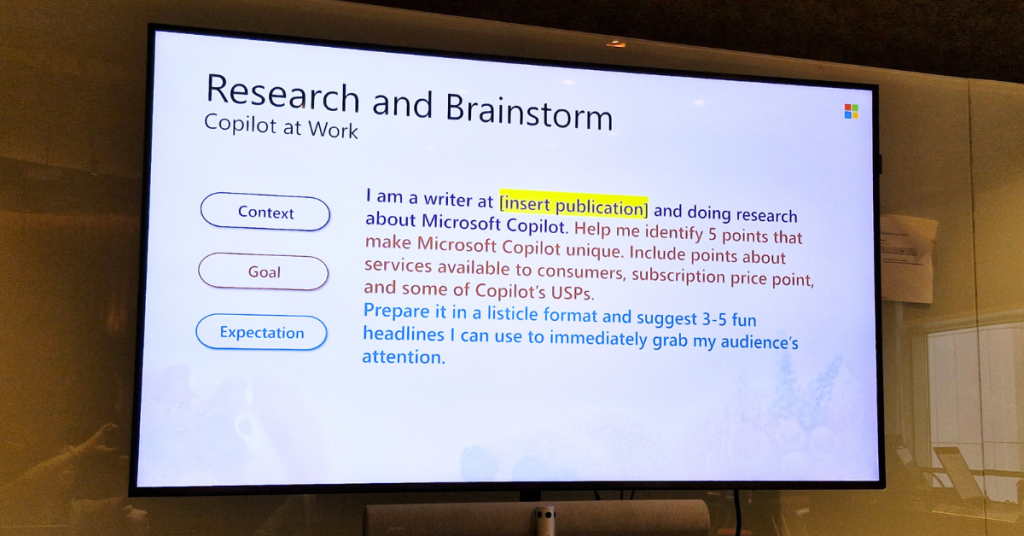

Brainstorming headlines and angles for a story

It’s basic stuff, the examples above.

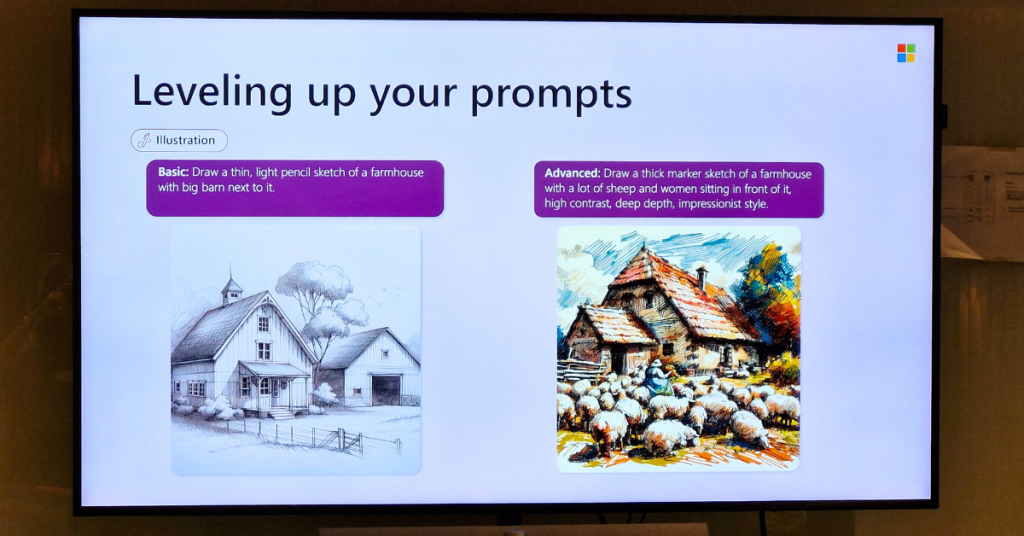

But throughout the demo, Celeste Bolano, Communications Lead at Microsoft Malaysia, reminded us of essential prompt-writing tips to get the results we wanted.

She broke it down into three points:

Always have an action for what you want Copilot to do

Provide any relevant context or details

Tailor the delivery for how you want Copilot to craft its response
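For anyone who likes to see a structure spelled out, here is a minimal Python sketch of those three points assembled into one prompt. It was not shown at the workshop: the `build_prompt` function and the sample wording are my own hypothetical illustration, and it only produces text that you would then paste into Copilot yourself.

```python
def build_prompt(action: str, context: str, delivery: str) -> str:
    """Join the three parts of a prompt into a single string.

    Hypothetical helper for illustration only; it is not part of Copilot.
    """
    parts = [action.strip(), context.strip(), delivery.strip()]
    # Skip any part left empty so the prompt has no stray spaces.
    return " ".join(part for part in parts if part)


if __name__ == "__main__":
    prompt = build_prompt(
        action="Suggest three dinner recipes.",
        context="I have leftover rice, eggs, and spinach in the fridge.",
        delivery="Reply as a numbered list with one sentence per recipe.",
    )
    print(prompt)
```

The point is less the code than the habit: if any of the three parts is missing from your prompt, that is usually the first thing to fix.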

What stood out to me in particular was how detailed Copilot’s content generation was.

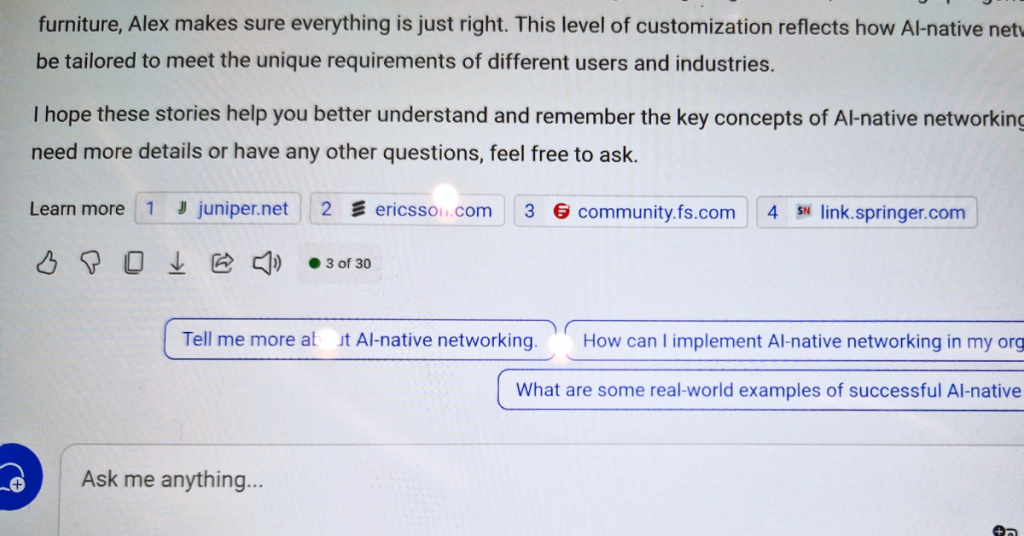

When I tested it out for breaking down difficult concepts, not only did Copilot provide the content I needed, but it also included references and links to the sources. This made fact-checking a breeze, ensuring I could verify everything and decide if the AI’s suggestions were accurate.

As a content creator myself, I consider fact-checking a fundamental best practice, even when AI is involved. It ensures that the content we publish is accurate, and this feature made Copilot stand out compared to other mainstream chatbots.

The seamless integration across Microsoft’s ecosystem was also impressive.

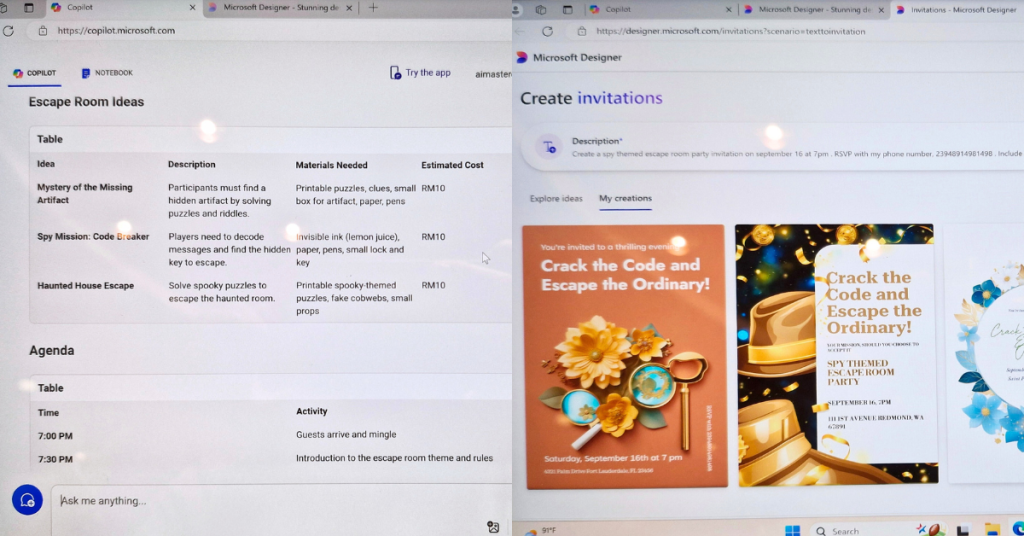

In another demo, we used Copilot to plan a party. Taking the event idea and schedule suggested, we could easily transfer everything to Microsoft Designer to create a poster.

The design elements—fonts, vectors, etc.—were customisable, making it a handy tool for event planners or social media marketers needing quick designs that align with their copy.

As we played around with the built-in design software, though, one question stuck with me: Is this AI-generated content safe to use?

What Microsoft had to say about using AI safely

AI is often seen as either a game-changer or a potential threat, with people torn between excitement and uncertainty about the implications of using AI.

With ethical and legal concerns in play, could publishing AI-generated content land creators in hot water?

In response, Adilah Junid, Director of Legal and Government Affairs at Microsoft Malaysia, told us, “We acknowledge that while transformative tools like AI open doors to new possibilities, they are also raising new questions.”

To elaborate, Microsoft incorporates provenance technology in Copilot and Microsoft Designer, which allows the origin of generated content to be identified. The company also adopts responsible practices when developing its AI tools, as per the publicly released Microsoft Responsible AI Standard.

Understanding the challenges that lie ahead with AI, Adilah shared:

“At Microsoft, we are guided by our AI principles and Responsible AI Standard along with decades of research on AI, grounding, and privacy-preserving machine learning. We make it clear how the system makes decisions by noting limitations, linking to sources, and prompting users to review, fact-check, and adjust content based on subject-matter expertise.”

Adilah Junid, Director of Legal and Government Affairs at Microsoft Malaysia

Stay curious, stay cautious

In the 2024 Work Trend Index, released jointly with LinkedIn, Microsoft highlighted that over 75% of knowledge workers are already using AI at work. The figure is even higher in Malaysia, at 84%.

For context, knowledge workers are those who apply analytical and theoretical expertise, typically acquired through formal education, to develop products and services.

“Employees, many of them struggling to keep up with the pace and volume of work, say AI saves time, boosts creativity, and allows them to focus on their most important work,” the report detailed. As a writer needing to stay creative and turn ideas into articles, I can relate.

Exploring Copilot revealed just how useful generative AI can be for content creators.

But, as with any new tool, it’s important to use it mindfully and stay aware of its potential impact. The future with AI looks promising, but it’s up to us to steer it in the right direction.

So, if you’re curious, give Copilot a go and see how it fits into your creative process. It’s free to use on browsers like Chrome and Microsoft Edge, and all you’ll need to get started is a Microsoft account.

Learn more about Microsoft Copilot here.

Read other articles we’ve written about AI here.

Featured Image Credit: Vulcan Post
